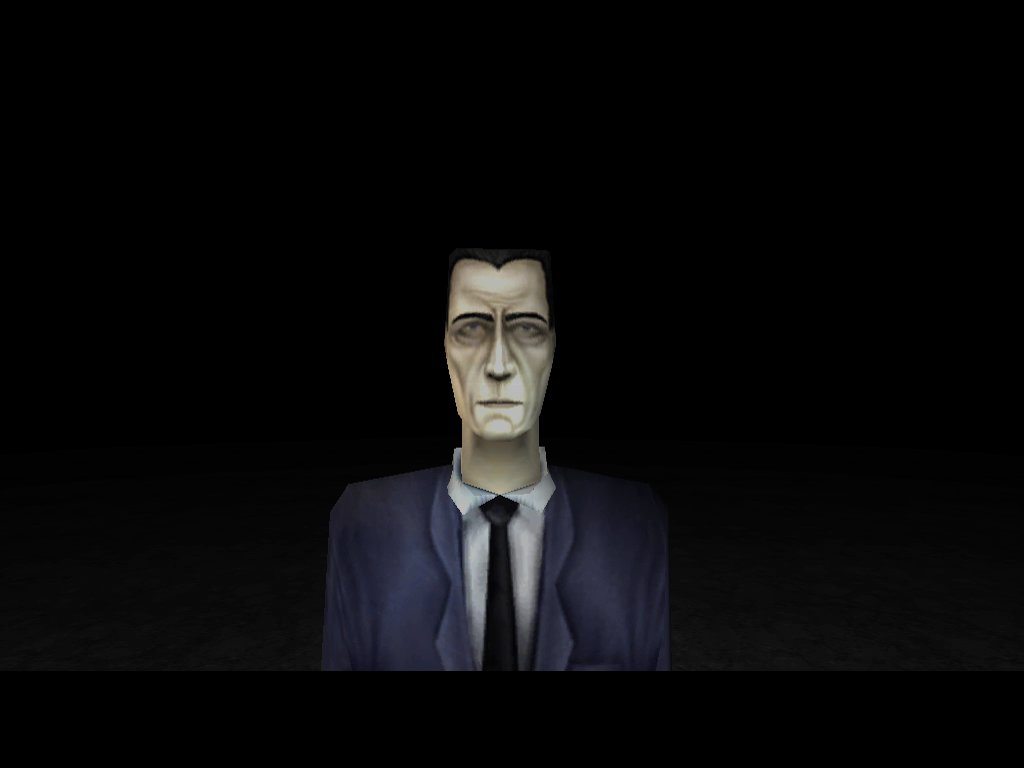

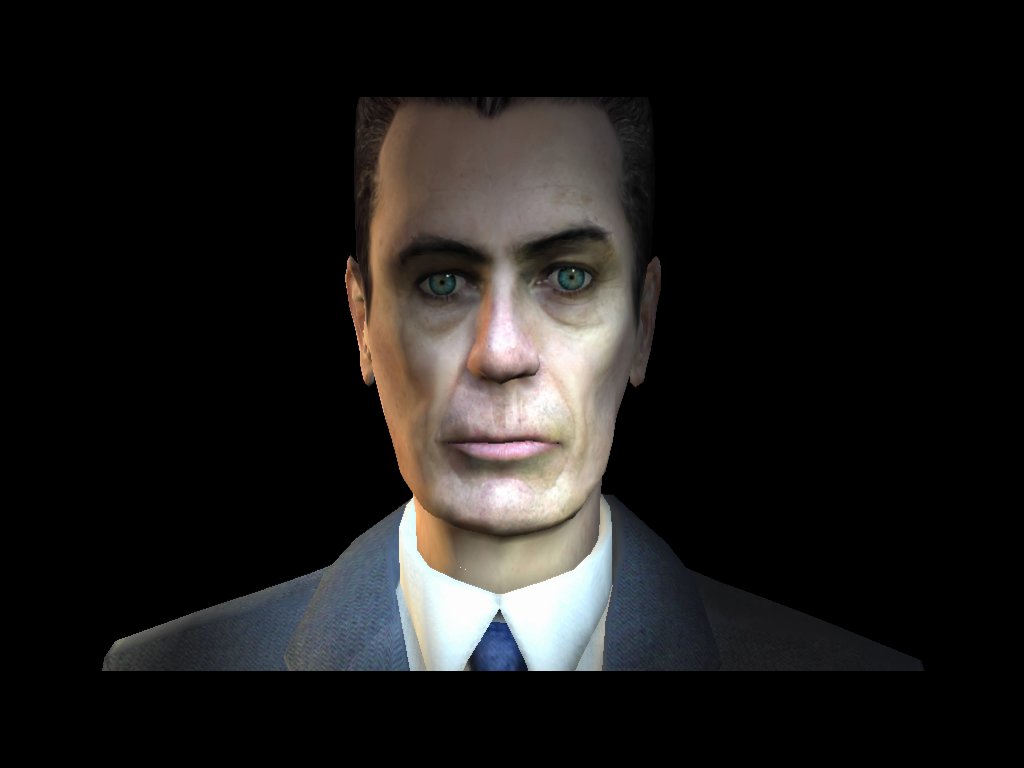

The G-Man from the video game Half-Life 2

From its humble beginnings in the 1970s, the video game business has grown into a 4 billion dollar a year industry, with no signs of slowing. As anyone who played older games like Pong, Pac-Man, or Galaga can tell you, early video games had primitive graphics. Objects and people in those games were rarely more than abstractions that barely resembled their real counterparts. But as with any entertainment medium, the industry advanced at a rapid pace. As the technology improved, game designers were able to move away from abstraction toward realism, and characters could become more humanlike in their actions. As this happened, it became increasingly important to create characters who didn't just act as storytellers but could draw players more deeply into the game emotionally.

Here we are in 2005, witnessing some of the greatest achievements yet in the field of virtual humans. Almost every game released today tries to render its characters in a way that elicits an emotional connection to them. How do developers do this?


One of the most important tools we use to connect with other people is the recognition of facial patterns. Unconsciously, we all read the movements of the lips, eyes, eyebrows, cheeks, and other facial features to gauge a person's emotional state and motivations. Without these signals, we have trouble telling whether someone is happy, sad, angry, tired, and so on. Have you ever seen someone without eyebrows? It may take a second to work out what is unsettling about them, but once you notice, you have to readjust in order to read their face.

Armed with this knowledge, game designers have used facial animation to make their characters look more real, to the point where they are approaching photorealism. In today's games, we see characters who can look surprised or angry, and whose lips move in sync with their speech. Each of these advances strengthens the player's suspension of disbelief and draws the player in deeper. As characters become more realistic, the barrier of the screen becomes less and less visible.

Do we believe the characters when we play? Do we feel emotion for them? Do we hate it when we see the villain smirk as they run off with our [insert valuable item name here]? Do we feel sorry for our sidekick when he tells us with his dying breath to go get the bad guy? These questions lead straight to the Uncanny Valley: the more humanlike a character becomes, the more strongly we respond to it, right up to the point where a nearly, but not quite, human face starts to feel eerie instead of engaging. This essay traces the evolution of facial animation to this point and provides resources for exploring it in more depth.

A brief history

In the beginning, there were blocks. And the god of video games said, "Let these blocks be used to create abstract drawings of things." And it was ugly, but it was a start.

As the video game industry formed and the digital medium was in its infancy, developers had to rely on abstractions of the objects and characters in their games, since the technology was too limited to do anything better. However, technology improved little by little, bringing more and more lifelike qualities to the characters on screen.

When Nintendo introduced the Nintendo Entertainment System, or NES, in 1985, game design and the gaming industry as a whole reinvented themselves. While far from lifelike, the NES's drawing ability allowed content creators to work with far fewer limitations. Faces could now be drawn with some detail, reaching just shy of the quality of an animated cartoon. This was an era in which new approaches to design could be explored. Around the same time, and even a few years earlier, companies were experimenting with 3D computer-generated graphics, producing consumer entertainment such as the film Tron in 1982.

But the believability of characters was still largely left to the audience's imagination. Without photorealistic characters, abstraction was still a designer's best friend.

A time for innovation

Along came id Software. In 1992 they released Wolfenstein 3D. While not a true 3D game, it introduced a new paradigm to games: freedom of movement from a first-person perspective. You no longer had to watch the action from an omniscient vantage point; you could get right into the action, up close and personal with your enemies. With this new freedom of movement, it became increasingly important to focus on the faces of the characters in the game, since they would now constantly be in front of you, as large as life.

Next came Doom in 1993, which raised the bar for technology and graphics and allowed for even greater freedom of movement. The problem was that both Wolfenstein 3D and Doom still relied on pre-drawn images to represent their characters, which could be viewed from only 8 different angles. Again, it was time for a change...
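The eight-angle limit can be pictured with a short Python sketch. It assumes one pre-drawn image per 45-degree slice, numbered counterclockwise with 0 as the front view, and picks the image whose slice contains the direction from the character to the viewer. The function name and numbering are hypothetical illustrations of the idea, not code from Wolfenstein 3D or Doom.

```python
import math

NUM_ANGLES = 8                       # one pre-drawn image per 45-degree slice
SLICE = 2 * math.pi / NUM_ANGLES


def sprite_frame(char_x, char_y, char_facing, viewer_x, viewer_y):
    """Pick which of 8 pre-drawn views to show (hypothetical numbering).

    0 = front view (character faces the viewer), 4 = back view; the other
    indices step counterclockwise in 45-degree increments. Angles are radians.
    """
    # Direction from the character toward the viewer, in world space.
    to_viewer = math.atan2(viewer_y - char_y, viewer_x - char_x)
    # Express that direction relative to the way the character is facing.
    relative = (to_viewer - char_facing) % (2 * math.pi)
    # Snap to the nearest slice; an index of 8 wraps back to 0.
    return int(round(relative / SLICE)) % NUM_ANGLES


# A character at the origin facing east (angle 0), viewed from due west.
frame = sprite_frame(0.0, 0.0, 0.0, -10.0, 0.0)
```

Here the viewer stands directly behind the character, so `frame` is 4, the back view. Every viewer position in between snaps to the nearest of the eight images, which is why sprite characters seem to pop between poses as you circle them.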

Then came Quake in 1996, and a new era in game design was born. Pre-drawn views of characters and objects were gone, replaced by 3D models that could be viewed from any angle. This allowed far more realistic perspectives inside a game world, and the technology took off from there. New 3D modeling techniques appeared constantly, and image quality, surface detail, and model animation improved at a staggering pace. This was the jumping-off point from which game designers could build proportionally correct human models and combine every aspect of game design to create believable characters. A big advance was the ability to create realistic-looking surfaces by applying texture mapping and lighting techniques to polygons.


Moving to the present

With the advent of new technology came greater complexity in game design. Players would no longer settle for faces that didn't move when characters spoke, or that only displayed a generic animation of the mouth opening and closing. Pre-rendered cinematic scenes, similar to the computer animation in movies like Shrek and The Polar Express, were becoming too expensive for the diminishing payoff they delivered. Developers began finding ways to make the in-game characters that players actually interact with more realistic, allowing for deeper emotional connections. By building advanced facial animation systems into their character models, designers could now tap the instinctive human ability to read emotions from faces instead of relying entirely on text and speech.

Valve Software, creators of the Source engine and Half-Life 2, built a streamlined facial animation system that is much easier to manipulate than hand-drawn animation or motion capture. By including a muscle system in the character models, they could make a character's face react in the same ways real human faces do. The eyebrows move independently, the eyes move independently of the head, and the mouth can recreate all of our own contortions. Other companies, such as OC3 Entertainment, have developed similar systems in which every part of the face can be manipulated, along with comprehensive lip-synching.
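Valve's internals aren't described here, but one common way to build independently controllable facial "muscles" is blend shapes (also called morph targets): each control stores how far it moves certain vertices away from a neutral face, and the final face is the neutral mesh plus each offset scaled by that control's weight. The Python sketch below is a minimal illustration under that assumption; the control names and the four-vertex face are hypothetical, and this is not Valve's or OC3 Entertainment's actual implementation.

```python
from typing import Dict, List, Tuple

Vec3 = Tuple[float, float, float]


def blend_face(neutral: List[Vec3],
               shapes: Dict[str, Dict[int, Vec3]],
               weights: Dict[str, float]) -> List[Vec3]:
    """Return neutral + sum(weight * offset) over every active control.

    `shapes` maps a (hypothetical) control name to sparse per-vertex
    offsets, so each control moves only the vertices it owns.
    """
    result = [list(v) for v in neutral]
    for name, weight in weights.items():
        # Clamp to [0, 1] so a control can't exceed its sculpted extreme.
        w = max(0.0, min(1.0, weight))
        for index, (dx, dy, dz) in shapes.get(name, {}).items():
            result[index][0] += w * dx
            result[index][1] += w * dy
            result[index][2] += w * dz
    return [tuple(v) for v in result]


# Toy face: 0 = left brow, 1 = right brow, 2 = upper lip, 3 = lower lip.
neutral = [(-1.0, 2.0, 0.0), (1.0, 2.0, 0.0), (0.0, -1.0, 0.0), (0.0, -1.2, 0.0)]
shapes = {
    "brow_raise_left": {0: (0.0, 0.3, 0.0)},
    "brow_raise_right": {1: (0.0, 0.3, 0.0)},
    "jaw_open": {3: (0.0, -0.5, 0.0)},
}
face = blend_face(neutral, shapes, {"brow_raise_left": 1.0, "jaw_open": 0.5})
```

Because each control owns only its own vertices, raising `brow_raise_left` lifts the left brow while the right brow (vertex 1) stays put, and the jaw opens halfway. A lip-synching system could drive the same kind of weights from timed speech sounds instead of setting them by hand.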

The picture on the right shows the G-Man as he appeared in 1998's Half-Life. The picture on the left shows his evolution in 2004's Half-Life 2.

The Future and Beyond

Where do we go from here? The technology is available, and game designers are limited only by their imaginations. Soon we will see complex integrations of multiple animation and artificial intelligence systems, bringing us to the point where characters grow increasingly independent of the developer's touch.


Imagine a player speaking into a microphone to a holographic image while a camera records the player's facial details to gauge his or her emotional state. The computer uses voice recognition to parse the player's speech and then, through an artificial intelligence response system, replies in a synthesized voice from a character whose face shows the appropriate expressions and reactions. Now imagine that the character is the spitting image of your closest friend. The technology for all these things exists or is being worked on now. When someone finally combines them, this scenario could become reality.

The possibilities are truly endless, and not just for games. This technology is at the forefront of automated customer interaction software, teaching tools, and more. We may even end up interacting with virtual humans more than with real ones. Does that make you feel Uncanny?

MORE ON VIRTUAL HUMANS: